Reversing a Neural Network

In this post, we explore how to “reverse” a neural network, a technique also known as feature visualization.

The traditional way to train a neural network is to optimize the weights to achieve a task:

When we reverse a neural network, we optimize the input instead of optimizing the weights.

This produces an image that strongly activates the part of the network we choose to target, which lets us probe what that part of the network responds to.
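To make the idea concrete, here is a minimal sketch in Python with NumPy. It assumes a tiny, randomly initialized two-layer ReLU network standing in for a real CNN; the weights `W1`, `W2` and the functions `activation_and_grad` and `visualize` are hypothetical and not taken from the post's code. The weights are never updated. Instead, gradient ascent runs on the input `x` to increase one output unit's activation, and the input is rescaled to norm at most 1 so the activation cannot grow just by scaling `x` up.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen "network": 8 inputs -> 16 ReLU hidden units -> 4 output units.
# Training would update W1 and W2; reversing the network keeps them fixed.
W1 = rng.normal(size=(16, 8))
W2 = rng.normal(size=(4, 16))


def activation_and_grad(x, unit):
    """Return the activation of one output unit and its gradient w.r.t. the input x."""
    pre = W1 @ x
    h = np.maximum(pre, 0.0)              # ReLU hidden layer
    a = W2[unit] @ h                      # the unit we target
    # Chain rule: da/dx = W1^T (W2[unit] * relu'(pre))
    grad = W1.T @ (W2[unit] * (pre > 0))
    return a, grad


def visualize(unit, x0, steps=200, lr=0.05):
    """Gradient ascent on the input (not the weights) to excite `unit`."""
    x = x0.copy()
    best_x, best_a = x.copy(), activation_and_grad(x, unit)[0]
    for _ in range(steps):
        _, g = activation_and_grad(x, unit)
        x = x + lr * g                        # step uphill in input space
        x = x / max(1.0, np.linalg.norm(x))   # project back into the unit ball
        a = activation_and_grad(x, unit)[0]
        if a > best_a:                        # keep the best input seen so far
            best_x, best_a = x.copy(), a
    return best_x
```

Usage: `x_star = visualize(unit=2, x0=rng.normal(scale=0.01, size=8))` starts from small noise. With a real model such as VGG19, an autodiff framework would compute the input gradient instead of the hand-derived chain rule used here.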

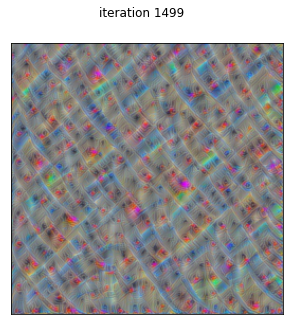

Earlier layers of the network show intricate patterns:

Later layers produce sometimes creepy-looking alien animals, a mishmash of the things the network has learned to recognize:

We can even animate (and visualize) the process from noise to convergence:
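One way such an animation could be produced is to keep a snapshot of the input every few optimization steps. The sketch below assumes a hypothetical toy ReLU network acting on an 8x8 "image" (`W1`, `W2`, `grad_wrt_input` and `optimize_with_frames` are illustrative names, not the post's code) in place of the real model.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical frozen toy network on an 8x8 "image": 64 inputs -> 32 ReLU units -> 4 outputs.
W1 = rng.normal(size=(32, 64))
W2 = rng.normal(size=(4, 32))


def grad_wrt_input(x, unit):
    """Gradient of output `unit` with respect to the flattened input x."""
    pre = W1 @ x
    return W1.T @ (W2[unit] * (pre > 0))


def optimize_with_frames(unit, steps=100, lr=0.01, every=10):
    """Run gradient ascent from noise, keeping an 8x8 snapshot every `every` steps."""
    x = rng.normal(scale=0.1, size=64)        # start from random noise
    frames = [x.reshape(8, 8).copy()]         # frame 0: the initial noise
    for step in range(1, steps + 1):
        x = x + lr * grad_wrt_input(x, unit)
        x = x / max(1.0, np.linalg.norm(x))   # keep the input bounded
        if step % every == 0:
            frames.append(x.reshape(8, 8).copy())
    return frames
```

Each frame could then be rescaled to [0, 1] and written out as one image of the animation, for example with matplotlib's `FuncAnimation` or any GIF tool.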

Many tricks go into making this reversal work well, and I wanted to try my own “simple” implementation. I used VGG19 because its linear (purely sequential) structure made it easy to dissect.
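The post does not list which tricks it uses, so the following is only a sketch of two common ones: random jitter (translating the image by a pixel or so at each step) and an L2 penalty on the input, which keeps pixel values bounded. It also mimics why a sequential model is easy to dissect: the hypothetical network is just a list of weight matrices, and targeting a deeper layer means running more of that list (torchvision, for instance, exposes VGG19's convolutional part as an `nn.Sequential` under `.features`). Assumptions: dense layers on a flattened 8x8 image replace VGG19's convolutions, all names (`layers`, `unit_grad`, `visualize`) are illustrative, and the target is a unit's pre-ReLU value so its gradient is not zero while the unit itself is inactive.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical sequential network: 64 -> 32 -> 32 -> 16, each layer followed by ReLU.
sizes = [64, 32, 32, 16]
layers = [rng.normal(size=(n_out, n_in)) / np.sqrt(n_in)
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def unit_grad(x, depth, unit):
    """Pre-ReLU value of `unit` in layer `depth` (1-based) and its gradient w.r.t. x."""
    pres, h = [], x
    for W in layers[:depth]:                  # run only the first `depth` layers
        pre = W @ h
        pres.append(pre)
        h = np.maximum(pre, 0.0)
    a = pres[-1][unit]
    g = np.zeros_like(pres[-1])
    g[unit] = 1.0                             # d a / d pre of the target layer
    for i in range(depth - 1, -1, -1):
        g = layers[i].T @ g                   # gradient w.r.t. this layer's input
        if i > 0:
            g = g * (pres[i - 1] > 0)         # back through the previous ReLU
    return a, g


def visualize(depth, unit, steps=300, lr=0.05, l2=0.05, max_shift=1):
    """Ascent on activation - l2 * ||x||^2, with random jitter at each step."""
    x = rng.normal(scale=0.01, size=(8, 8))
    for _ in range(steps):
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        shifted = np.roll(x, (dy, dx), axis=(0, 1))        # jitter: random translation
        _, g = unit_grad(shifted.ravel(), depth, unit)
        g = np.roll(g.reshape(8, 8), (-dy, -dx), axis=(0, 1))  # map gradient back to x
        x = x + lr * (g - 2 * l2 * x)                      # L2 penalty keeps x bounded
    return x
```

For example, `visualize(depth=1, unit=0)` targets a unit in the first layer and `visualize(depth=3, unit=0)` one in the last. The shift range and the `l2` weight are arbitrary choices that would need tuning on a real model.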

There is a lot of really nice work on the subject, far more advanced than what I present here.

Here is an excellent in-depth intro to the subject that goes much further than this post.

More recently, it was used to study multimodal neurons in CLIP, the image-text model OpenAI released alongside DALL-E.

I made all the code available, so you can try looking for weird creatures yourself!
