ControlNet Game of Life

Update: Follow the discussion on HackerNews

TL;DR

In this post, we will use ControlNet to animate the Game of Life.

Try it yourself

Skip to the next section to get to the explanation of how it works.

You can try running it on the HuggingFace 🤗 space, or on the Colab link. They both have GPU usage limits, so you can play around with both and see what works for you.

How it works

ControlNet

Fundamentally, we are using Stable Diffusion to generate the images. However, vanilla Stable Diffusion has no way to preserve the grid of cells, especially as we iterate through the game.

Instead, we use ControlNet, an extension of Stable Diffusion that adds an extra conditioning input. ControlNet has the nice property that you can feed it both a start image and a “control image”, which encodes the general outlines of the shapes you want to preserve.

In practice, the control image can be a segmentation map, a Canny edge map, and so on. With it, we can preserve the shapes while letting the model generate the fill. Here, ControlNet is used to map a painting to another painting while preserving the original structure.

QR Code ControlNet

Shortly after ControlNet was released, people came up with a fun use case: fine-tuning ControlNet to preserve the structure of QR codes. Think about it: if you can preserve the shapes of the tiles well enough, you can generate QR codes that are actually fun to look at. It's simple yet powerful:

Connecting the Dots

Inspired by this idea, I thought it would be fun to connect the dots: after all, a Game of Life grid can easily be thought of as a QR code grid, as I wrote about in a previous post.

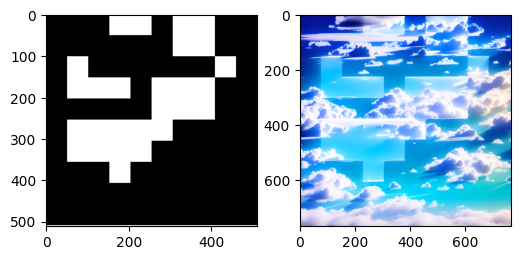

We pretty much just need to generate a control image from each game of life state, and use it to guide our ControlNet model. For example:
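A minimal sketch of that conversion, using only the standard library. The helper name `grid_to_pixels`, the `cell_size` and `border` parameters, and the choice to draw live cells dark on a light background (like QR modules) are assumptions made for illustration, not the implementation used in the demo.

```python
# Hypothetical helper: turn a Game of Life grid into a grayscale control image.
# Assumption: live cells are drawn dark (0) and dead cells light (255).

def grid_to_pixels(grid, cell_size=8, border=1):
    """Upscale a 2D grid of 0/1 cells into rows of 0-255 grayscale values."""
    # Pad with dead cells so the pattern has a quiet margin around it.
    width = len(grid[0]) + 2 * border
    padded = [[0] * width for _ in range(border)]
    padded += [[0] * border + list(row) + [0] * border for row in grid]
    padded += [[0] * width for _ in range(border)]

    pixels = []
    for row in padded:
        # Each cell becomes a run of cell_size identical pixels.
        pixel_row = []
        for cell in row:
            pixel_row.extend([0 if cell else 255] * cell_size)
        # Repeat the pixel row cell_size times so the cells are square.
        pixels.extend(list(pixel_row) for _ in range(cell_size))
    return pixels


# A glider on a 3x3 grid, rendered as a 20x20 pixel control image.
glider = [
    [0, 1, 0],
    [0, 0, 1],
    [1, 1, 1],
]
control_pixels = grid_to_pixels(glider, cell_size=4, border=1)
```

The nested list can then be handed to an imaging library. With NumPy and Pillow installed, for instance, `Image.fromarray(np.array(control_pixels, dtype=np.uint8))` yields a grayscale image that can be resized to the resolution the model expects.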

Now we can iterate over the successive states of the game and generate an image for each one. Note that nothing guarantees temporal consistency; we are just hoping for the best by controlling the seeds. Here is the overall pseudo-code that we then need to implement:

    # Pseudo-code for generating a gif
    controlnet = ControlNet() # Load the model
    game_of_life = GameOfLife(seed=42) # Initialize the game of life
    source_image = load_image(IMAGE_URL) # Load a source image (e.g. a volcano)
    num_steps = 50 # Number of steps to iterate through the game
    frames = [] # Collect all generated frames here

    for step in range(num_steps):
        game_of_life.step() # Play a step in the game of life
        grid_image = game_of_life.grids[-1].to_image() # Generate an image from the latest grid
        frame = controlnet.generate(source_image=source_image, control_image=grid_image, **controlnet_kwargs) # Generate the controlnet frame
        frames.append(frame)

    render_gif(frames)
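The `GameOfLife` object in the pseudo-code could be sketched roughly as follows. This is an illustrative stand-in, not the code behind the demo: the `rows`, `cols`, and `density` parameters, the wrap-around edges, and the use of plain nested lists for each grid (rather than objects with a `to_image()` method) are all assumptions.

```python
import random


class GameOfLife:
    """Illustrative stand-in for the GameOfLife object in the pseudo-code."""

    def __init__(self, seed=42, rows=16, cols=16, density=0.3):
        # A seeded generator makes the starting grid reproducible.
        rng = random.Random(seed)
        first = [[1 if rng.random() < density else 0 for _ in range(cols)]
                 for _ in range(rows)]
        self.grids = [first]  # history of states, newest last

    def _live_neighbours(self, grid, r, c):
        rows, cols = len(grid), len(grid[0])
        total = 0
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr == 0 and dc == 0:
                    continue
                # Assumption: the board wraps around at the edges (a torus).
                total += grid[(r + dr) % rows][(c + dc) % cols]
        return total

    def step(self):
        """Compute the next state and append it to the history."""
        grid = self.grids[-1]
        new_grid = []
        for r, row in enumerate(grid):
            new_row = []
            for c, cell in enumerate(row):
                n = self._live_neighbours(grid, r, c)
                # Conway's rules: a live cell survives with 2 or 3 live
                # neighbours; a dead cell comes alive with exactly 3.
                new_row.append(1 if n == 3 or (cell and n == 2) else 0)
            new_grid.append(new_row)
        self.grids.append(new_grid)


game = GameOfLife(seed=42)
game.step()
latest_grid = game.grids[-1]  # the state a control image would be drawn from
```

Keeping the whole history in `grids` mirrors the `game_of_life.grids[-1]` access in the pseudo-code and makes it easy to re-render earlier frames without replaying the game.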

We can start with plenty of different types of images. For example, here is one with the famous “Tsunami by Hokusai” theme:
